The 12th EALTA Conference in Copenhagen, Denmark

Review of the 12th EALTA Conference, Copenhagen, Denmark – 28 - 31 May 2015

Esra Özoğul Spiby & Richard Spiby

The annual EALTA conference was held at the University of Copenhagen this year. Very enjoyable it was too, although it was good to hear that the Istanbul conference of two years ago was still remembered with fondness by many of the participants. The focus of the conference this year was the link between policy and practice in language testing. Policy-making here was defined quite broadly, not just at a governmental or national level but also in terms of issues such as language testing for university entry and for acceptance to education and training in general. This meant that, as usual, there were presentations from universities around the world. Although many were highly technical validation studies of institutional exams, most had some relevance to our context at Sabancı University (SU), and we’ll outline some of the most thought-provoking ones below.

Formative Assessment

In the opening plenary, Ofra Inbar-Lourie from Tel Aviv University addressed some issues related to formative assessment and considered why there is often such a discrepancy between policy – what countries and institutions claim to be doing and their statements of intent – and what actually happens in practice. There were great hopes for formative assessment as a way of complementing existing assessment procedures, but this has not always worked out. Some of the main problems were identified:

•The aims of summative assessment can interfere with and even compete with those of formative assessment.

•Teachers are often not trusted by their governments and/or institutions to have the ability to implement formative assessment goals.

•Formative assessment has never been adequately defined.

•Its methodology has not been adequately described.

•The assessment aspect of formative assessment has not been properly developed.

•A false distinction is often made between formative and summative assessment.

•The CEFR is heavily biased towards summative assessment.

However, perhaps the main issue to be dealt with in formative assessment is that of culture within an institutional and social context. The existing cultural conditions determine how much change is necessary to implement formative assessment effectively. Cultural change is essential; otherwise, formative assessment cannot be successful and, importantly, cannot be sustained over the long term. This is because people tend to fall back on their own cultural norms and ways of behaving if formative assessment is not contextualized and presented to them properly (this applies to both teachers and students). Language assessment needs to be situated, in that it takes place in a particular multilingual setting, with access to a set of digital resources, and is oriented towards learning in a particular context. Therefore, for formative assessment to work, the local context must be considered carefully and adapted to. Students need to feel comfortable asking questions and negotiating, while teachers (and students) need to have a good level of language assessment literacy. This means that everyone can understand the possibilities and risks that formative assessment can bring, and the transparency which is vital to the process becomes possible. When these conditions are fulfilled, assessment can be said to serve and aid learning.

Technology

Dan Joyce from the Eiken Foundation in Japan explained how technology could be used to solve a specific assessment problem. For the university English entrance exam in Japan, a multi-stage speaking test had been developed which was found to work well as a test of spoken academic English. However, with developments in the Japanese system, the need arose for the test to deal with very large numbers of students quickly, reliably and at reasonable cost. The main problem was that some form of double marking was required, while the results needed to be processed in a short amount of time. This meant that the examiner needed to provide a score for each test-taker while also administering a quite complex test with four distinct stages:

•Interview

•Role play

•Monologue

•Extended Interview

Since the test had been validated and had been found to be fit for purpose, an iPad application was developed so that the examiner would be able to administer the test comfortably and also give reliable grades. Basically, the iPad is used to handle all administrative details. These include:

•ID checks using test-taker QR codes

•Providing task instructions

•Strict timing of each exam section

•Audio recording of student response

•Recording the examiner’s score

As the information is stored digitally, it can easily be reused (for example, recordings can be assigned to appropriate second markers) and analysed statistically. The system has been trialled and improved, and the results of working with the application seem to be very positive. This seems to be a good example of innovation used to address a problem arising from a particular testing context.

Reading

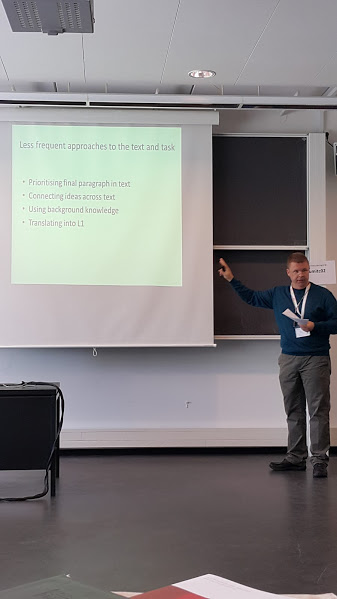

At the Special Interest Groups for Assessing Writing and Assessment for Academic Purposes, a fascinating presentation was given by Richard Spiby ☺, who reported on his research into test-taker performance and strategies in tests of expeditious (skimming) and careful (detailed) reading at Sabancı University in Turkey. This study was part of the Reading MA ELT distance program and was awarded a distinction.

Richard claimed that despite an abundance of research into the nature of second language reading, there is little consensus on the components or processes that it involves. In the field of language testing, this debate continues over the existence of separate testable reading components. His study investigated the behaviour of 88 B1+/B2 level students and 3 teachers at SU during the completion of two academic reading subtests, one of quick and selective ‘expeditious reading’ and one of ‘careful reading’, involving detailed understanding of important ideas, both developed with reference to the framework of Urquhart and Weir (1998). After the tests, quantitative and qualitative data were collected through semi-structured questionnaires with students and open-ended, retrospective interviews with 10 students and 3 teachers. From this data, test-taker behaviour and strategy use were compared and the underlying mental processes of the test-takers as they completed the two tests were investigated.

The results of the study suggested that there were significant differences in several of the strategies used on the two tests. In terms of test-taker scores, there was a small but positive correlation between the two tests, reflecting the underlying reading ability of students in both tests but also their tendency to show highly variable performance on either test. As for strategies, there were many differences between the two types of reading. In expeditious reading, test-takers tended to prioritise:

•looking for names and numbers

•looking in the text for synonyms of words in the items

•using the title and subheadings

•the beginning & end of paragraphs

In careful reading, students tended to:

•guess vocabulary from context

•read all of a relevant paragraph slowly

•look at connections between sentences

•reread sections of text

•read only item-related text

However, there was also considerable overlap in several areas, particularly in terms of the incorporation of expeditious strategies into careful reading, such as scanning for specific names and numbers and searching for topic-related keywords. The interview data revealed that test-takers tended to use expeditious reading skills as a short-cut to find answers quickly under exam conditions but were often forced to use careful reading skills when these did not work. Successful performers tended to show greater awareness of how well their strategies were working and when to try a new approach. These results have important implications for the testing of academic reading, particularly the importance of areas such as reading speed, strict control of test conditions, the level of test candidates’ metacognitive awareness, and the role of interactive compensatory theories of reading in informing the academic reading construct. It appears that it is worthwhile testing different types of reading separately, but we should not be surprised if students try to answer them with similar strategies.

Vocabulary

Elke Peters, Tom Velghe and Tinne Van Rompaey from the University of Leuven in Belgium described their development of tests to determine vocabulary size in their learners. Since vocabulary level is a good predictor of general language proficiency, this is an especially important area in testing. Four different tests were developed to assess students’ range of vocabulary at different levels: up to 2000, 3000, 4000 and 5000 words, using a total of 30 items at each level. A cut-off score of 80% is used to determine whether the student is at the tested level. The care that needs to be taken in developing an effective test of this type was demonstrated in the detailed explanation of the test design. The presenters tried to improve on previous attempts to construct vocabulary size tests by:

•using only the most up-to-date British and American corpora as frequency reference points

•having a sufficient sampling rate (no. of items)

•excluding cognates, which do not demonstrate acquired language knowledge

•taking into account word form and part of speech frequencies

•trying to eliminate guessing as much as possible

Different versions of the test at each level were trialled and analysed in terms of equivalence, discriminability and consistency. With some amendments to items, the data indicates that these tests are highly effective for use in the Belgian context.
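
As a rough illustration of the cut-off rule described in this section, the short Python sketch below works out how many of the 30 items a student must answer correctly to reach the 80% threshold. The function names and the sample scores are hypothetical, and the placement rule in `estimated_level` (a student is placed at the highest level for which that level and every lower level reach the cut-off) is our assumption rather than something stated by the presenters.

```python
# Figures taken from the talk as reported above.
ITEMS_PER_LEVEL = 30
CUT_OFF_PERCENT = 80
LEVELS = (2000, 3000, 4000, 5000)


def min_correct(items=ITEMS_PER_LEVEL, percent=CUT_OFF_PERCENT):
    """Smallest number of correct answers that reaches the cut-off.

    Integer ceiling division avoids floating-point rounding issues.
    """
    return (items * percent + 99) // 100


def reaches_level(correct, items=ITEMS_PER_LEVEL, percent=CUT_OFF_PERCENT):
    """True if the score on one level's test meets the cut-off."""
    return correct * 100 >= percent * items


def estimated_level(scores):
    """Highest level reached, given a mapping of level -> items correct.

    ASSUMPTION (not from the talk): levels are checked in ascending
    order and placement stops at the first level that is missed.
    Returns None if even the lowest level is not reached.
    """
    reached = None
    for level in LEVELS:
        if level in scores and reaches_level(scores[level]):
            reached = level
        else:
            break
    return reached


if __name__ == "__main__":
    # Hypothetical student scores, for illustration only.
    sample = {2000: 28, 3000: 25, 4000: 21, 5000: 26}
    print(min_correct())            # items needed per level
    print(estimated_level(sample))  # placement under the assumed rule
```

With 30 items and an 80% cut-off, a student needs at least 24 correct answers to be judged as having reached a given level.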

Academic Listening

In the closing plenary, John Field considered the possible mismatch between the requirements of academic tests and the real-world listening needs of test-takers. He approached this from the perspective of cognitive validity, in other words, by looking at the knowledge and processing of the listener while performing listening tasks. Such a psychological approach to listening has not been used a great deal in the field of applied linguistics, but interest is now increasing.

In constructing a listening test, it is important to remember that the test cannot be completely authentic; rather, the aim is to replicate the cognitive processes that the listener would use in a real-life situation. Thus, the question in terms of cognitive validity is “Is the listener thinking in the right way?”, which means that not only ‘language knowledge’ but also ‘language behaviour’ is being tested. As such, more emphasis needs to be put on identifying the listening construct at the test design stage rather than dealing with it through post hoc validation after the exam has been administered. Field identified three important considerations in listening test construction (also applicable to tests in general):

•construct relevance

•construct representation

•the extent to which the test is ‘tuned’ to the learner

Several ways of establishing validity were then outlined. For example, ‘expert’ language users (either native or non-native speakers) could be used as a model for the skills to be tested. Actual test-taker behaviour in exam conditions should also be observed, and candidates should report on how they answered particular items. Of course, the quality and authenticity of the listening text should also be carefully controlled.

The phases of the listening process, from lower-level to higher-level skills, are:

•Decoding the input

•Searching for lexis

•Parsing sentences

•Constructing meaning

•Constructing discourse

Students need to develop automaticity at the lower levels. Without this automaticity, they cannot devote cognitive resources to the higher-level operations. Since this is something that students often have great problems with, we need to equip them with compensatory listening strategies so that they can cope. It is these higher-level processes which are necessary for academic listeners, who need to apply their knowledge of the topic and of the world in general to understanding the text. In terms of discourse, they need to understand the discourse structure, i.e., the importance of certain points and the connections and comparisons between them, so that they can construct an overall hierarchical representation of the ideas in the text.

On a practical level, Field also pointed out some problems with certain listening item formats which are worth considering. When the test-taker has the opportunity to preview items before the listening, they are actually receiving much more information than they would be in a real-world situation – they are in effect being given a summary of the text before listening to it, which in turn encourages test-wise strategies. In addition, some item types are used because they have been shown to be reliable and practical for testing purposes, but we need to be careful that they do not impose greater demands on the listener than in real-life listening contexts. The while-listening gap-fill is one type of item which divides the attention of the test-taker, meaning that they tend to focus more on the question than on the listening input. Research in this area is ongoing and should continue to produce useful and applicable data.

On a related topic, Franz Holzknecht from the University of Innsbruck reported on a research project in progress to investigate the cognitive validity of playing a listening text twice rather than once during a listening test. Previous research has been inconclusive and contradictory. Some studies have shown that repeating the input improves test scores but decreases the capacity of the test to discriminate, while others have suggested that it has little effect in reality. The question is whether the repetition affects the construct or the listening operations, i.e., whether the listener is doing anything different the second time around. Interestingly, the research is using eye-tracking technology to investigate this: the listener provides a stimulated recall (using footage of their eye movements on the exam paper, synchronised with the listening input), describing their behaviour as they tried to answer the questions. While repeated listening seems to benefit responses to certain types of items, such as gap-fill, and appears to facilitate higher-level processing in test-takers, the full impact on cognitive validity is unclear, and it remains to be seen whether the research can provide insight into more than just test-taking strategies.

Overall, the conference was very stimulating and the quality of presentations was high, confirming EALTA as one of the leading language assessment conferences in the world. It was a fine reminder that there is a wide variety of research being conducted. This research is diverse in its focus on different languages and skills, in the countries involved, in its level, from individual classrooms to national and international education policy, and in its use of both traditional and novel research methodology. Turkish institutions were well represented at the conference and it would be good to see more people from SU take an interest in current language assessment issues.